Jetson Orin Nano Solves Entry-Level Edge AI Challenges, Delivering Up to 80X the Performance of Jetson Nano

NVIDIA recently launched the new Jetson Orin Nano system-on-modules that set a new standard for entry-level edge AI and robotics.

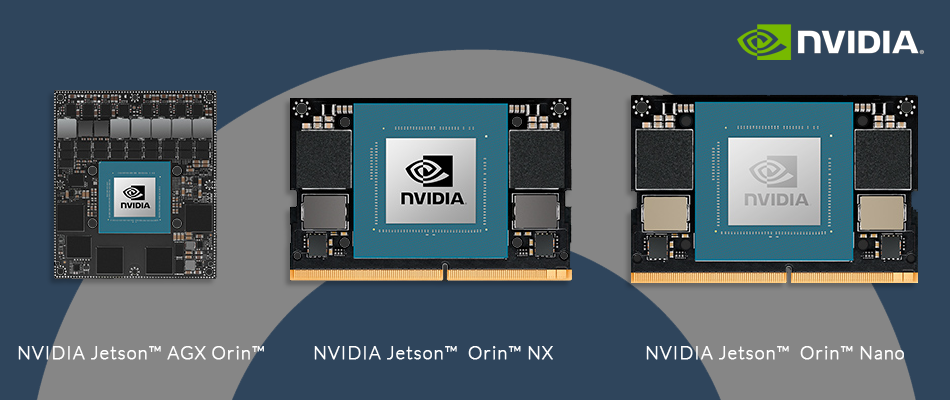

For the first time, the NVIDIA Jetson family spans six Orin-based production modules to support a full range of edge AI and robotics applications.

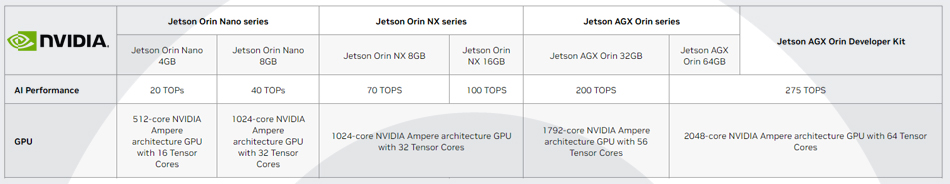

The lineup ranges from the Orin Nano, which delivers up to 40 trillion operations per second (TOPS) of AI performance in the smallest Jetson form factor, to the AGX Orin, which delivers 275 TOPS for advanced autonomous machines.

Jetson Orin features an NVIDIA Ampere architecture GPU, Arm-based CPUs, next-generation deep learning and vision accelerators, high-speed interfaces, high memory bandwidth and multimodal sensor support.

This performance and versatility empowers more customers to commercialise products that once seemed impossible, from engineers deploying edge AI applications to Robot Operating System (ROS) developers building next-generation intelligent machines.

“Over 1,000 customers and 150 partners have embraced Jetson AGX Orin since NVIDIA announced its availability just six months ago, and Orin Nano will significantly expand this adoption,” said Deepu Talla, vice president of embedded and edge computing at NVIDIA. “With an orders-of-magnitude increase in performance for millions of edge AI and ROS developers today, Jetson Orin is the ideal platform for virtually every kind of robotics deployment imaginable.”

Making Edge AI and Robotics More Accessible

The Orin Nano modules are form-factor and pin-compatible with the previously announced Orin NX modules. Full emulation support allows customers to get started developing for the Orin Nano series today using the AGX Orin developer kit. This gives customers the flexibility to design one system to support multiple Jetson modules and easily scale their applications.

Orin Nano supports multiple concurrent AI application pipelines with high-speed I/O and an NVIDIA Ampere architecture GPU. Developers of entry-level devices and applications such as retail analytics and industrial quality control benefit from easier access to more complex AI models at lower cost.

The Orin Nano modules will be available in two versions: the Orin Nano 8GB delivers up to 40 TOPS with power configurable from 7W to 15W, while the Orin Nano 4GB delivers up to 20 TOPS with power configurable from 5W to 10W.

The Jetson Orin platform is designed to solve the toughest robotics challenges and brings accelerated computing to over 700,000 ROS developers. Combined with the powerful hardware capabilities of Orin Nano, enhancements in the latest NVIDIA Isaac software for ROS put increased performance and productivity in the hands of roboticists.

Strong Ecosystem and Software Support

Jetson Orin has seen broad support across the robotics and embedded computing ecosystem, including from Canon, John Deere, Microsoft Azure, Teradyne, TK Elevator and many more.

The NVIDIA Jetson ecosystem is growing rapidly, with over 1 million developers, 6,000 customers (including 2,000 startups) and 150 partners. Jetson partners offer a wide range of support, from AI software, hardware and application design services to cameras, sensors and peripherals, developer tools and development systems.

NVIDIA Jetson Family Orin-based Production Modules

Jetson Orin Nano 8GB and 4GB Modules

NVIDIA Jetson Orin Nano series modules deliver up to 40 TOPS of AI performance in the smallest Jetson form factor, with power options between 5W and 15W. This gives you up to 80X the performance of NVIDIA Jetson Nano and sets a new standard for entry-level edge AI.

Both Jetson Orin Nano modules will be available in Jan. 2023.

Jetson AGX Orin 64GB and 32GB Modules

NVIDIA Jetson AGX Orin modules deliver up to 275 TOPS of AI performance with power configurable between 15W and 60W. This gives you up to 8X the performance of Jetson AGX Xavier in the same compact form factor for robotics and other autonomous machine use cases.

The Jetson AGX Orin 32GB module is available now.

Jetson AGX Orin 64GB will be available in Dec. 2022.

Jetson Orin NX 16GB and 8GB Modules

Jetson Orin NX modules deliver up to 100 TOPS of AI performance in the smallest Jetson form factor, with power configurable between 10W and 25W. This gives you up to 3X the performance of Jetson AGX Xavier and up to 5X the performance of Jetson Xavier NX.

Jetson Orin NX 16GB will be available in Dec. 2022.

Jetson Orin NX 8GB will be available in Jan. 2023.
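To make the trade-offs in this lineup concrete, here is a minimal sketch that encodes the peak TOPS and power ranges quoted above and picks the lowest-power module meeting a compute target. `Module`, `LINEUP` and `pick_module` are hypothetical names for illustration, not an NVIDIA API. The Orin NX and AGX Orin rows use the family-level "up to" figures from this article, and the sketch assumes peak TOPS is reached only in a module's highest power mode, so it compares the budget against the top of each power range.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Module:
    name: str
    peak_tops: int  # "up to" sparse TOPS as quoted in the article
    min_watts: int
    max_watts: int


# Figures from this article; NX and AGX entries are per-family maximums (assumption).
LINEUP: List[Module] = [
    Module("Jetson Orin Nano 4GB", 20, 5, 10),
    Module("Jetson Orin Nano 8GB", 40, 7, 15),
    Module("Jetson Orin NX (family)", 100, 10, 25),
    Module("Jetson AGX Orin (family)", 275, 15, 60),
]


def pick_module(required_tops: int, power_budget_w: int) -> Optional[Module]:
    """Hypothetical helper: return the lowest-power module whose peak TOPS meets
    the target while its highest power mode (where peak TOPS is assumed to be
    reached) still fits the budget, or None if nothing fits."""
    fits = [
        m for m in LINEUP
        if m.peak_tops >= required_tops and m.max_watts <= power_budget_w
    ]
    return min(fits, key=lambda m: m.max_watts, default=None)


print(pick_module(30, 15))
```

For example, a 30-TOPS target under a 15W budget selects the Orin Nano 8GB, while the same target under 12W returns `None`, because the 4GB module tops out at 20 TOPS.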

An In-Depth Look at Jetson Orin Nano 8GB and 4GB Modules

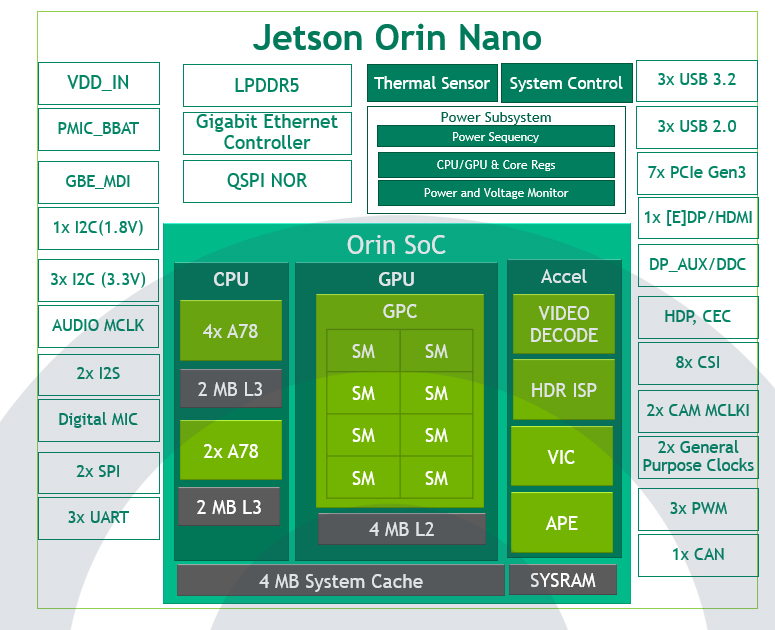

Figure 1. NVIDIA Orin architecture in Jetson Orin Nano 8GB. Jetson Orin Nano 4GB has 2 TPCs and 4 SMs.

As shown in Figure 1, Jetson Orin Nano showcases the NVIDIA Orin architecture with an NVIDIA Ampere Architecture GPU. It has up to eight streaming multiprocessors (SMs), providing up to 1024 CUDA cores and up to 32 Tensor Cores for AI processing.
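The core counts above follow from simple per-SM arithmetic. The sketch below derives 128 CUDA cores and 4 Tensor Cores per SM from the stated totals (1024 and 32 across eight SMs), and 2 SMs per TPC from the figure caption (2 TPCs and 4 SMs on the 4GB module). `gpu_config` is a hypothetical helper written for illustration, not an NVIDIA API.

```python
# Per-SM counts derived from the totals in the text: 1024 CUDA cores and
# 32 Tensor Cores spread across 8 SMs on Jetson Orin Nano 8GB.
CUDA_CORES_PER_SM = 1024 // 8  # 128
TENSOR_CORES_PER_SM = 32 // 8  # 4
# From the figure caption: the 4GB module has 2 TPCs and 4 SMs.
SMS_PER_TPC = 4 // 2  # 2


def gpu_config(num_tpcs: int) -> dict:
    """Hypothetical helper: SM, CUDA core and Tensor Core counts for a TPC count."""
    sms = num_tpcs * SMS_PER_TPC
    return {
        "tpcs": num_tpcs,
        "sms": sms,
        "cuda_cores": sms * CUDA_CORES_PER_SM,
        "tensor_cores": sms * TENSOR_CORES_PER_SM,
    }


orin_nano_8gb = gpu_config(4)  # 8 SMs
orin_nano_4gb = gpu_config(2)  # 4 SMs
print(orin_nano_8gb)
print(orin_nano_4gb)
```

With four TPCs this yields 8 SMs, 1024 CUDA cores and 32 Tensor Cores; with two it yields 512 CUDA cores and 16 Tensor Cores, matching the 4GB column of Table 1.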

The third-generation Tensor Cores in the NVIDIA Ampere Architecture deliver better performance per watt than the previous generation and add support for sparsity. With sparsity, you can take advantage of fine-grained structured sparsity in deep learning networks to double the throughput of Tensor Core operations.
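Fine-grained structured sparsity on Ampere Tensor Cores uses a 2:4 pattern: in every contiguous group of four weights, at most two are nonzero, so the hardware can skip half of the multiplications. The sketch below applies a simple magnitude-based 2:4 prune to a flat list of weights and shows the idealized 2x relationship between dense and sparse TOPS that appears in Table 1. It is a conceptual illustration only; `prune_2_of_4` and `sparse_tops` are hypothetical names, and real workflows typically prune with framework tooling and then fine-tune to recover accuracy.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Zero the two smallest-magnitude values in every group of four weights,
    producing a 2:4 structured sparsity pattern. Requires len % 4 == 0."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def sparse_tops(dense_tops: float) -> float:
    """Idealized upper bound: skipping half the multiplies doubles throughput."""
    return 2 * dense_tops


weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
print(prune_2_of_4(weights))  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
print(sparse_tops(20))        # 40: Orin Nano 8GB, 20 dense -> 40 sparse TOPS
```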

To accelerate all parts of your application pipeline, Jetson Orin Nano also includes a 6-core Arm Cortex-A78AE CPU, video decode engine, ISP, video image compositor, audio processing engine, and video input block.

Within their small 70 x 45 mm, 260-pin SO-DIMM footprint, the Jetson Orin Nano modules include various high-speed interfaces:

- Up to seven lanes of PCIe Gen3

- Three high-speed 10-Gbps USB 3.2 Gen2 ports

- Eight lanes of MIPI CSI-2 camera ports

- Various sensor I/O

To reduce your engineering effort, we’ve made the Jetson Orin Nano and Jetson Orin NX modules completely pin- and form-factor-compatible. Table 1 shows the differences between the Jetson Orin Nano 4GB and the Jetson Orin Nano 8GB.

| Specifications | Jetson Orin Nano 4GB | Jetson Orin Nano 8GB |
| --- | --- | --- |
| AI Performance | 20 sparse TOPS / 10 dense TOPS | 40 sparse TOPS / 20 dense TOPS |
| GPU | 512-core NVIDIA Ampere Architecture GPU with 16 Tensor Cores | 1024-core NVIDIA Ampere Architecture GPU with 32 Tensor Cores |
| GPU Max Frequency | 625 MHz | 625 MHz |
| CPU | 6-core Arm Cortex-A78AE v8.2 64-bit CPU, 1.5 MB L2 + 4 MB L3 | 6-core Arm Cortex-A78AE v8.2 64-bit CPU, 1.5 MB L2 + 4 MB L3 |
| CPU Max Frequency | 1.5 GHz | 1.5 GHz |
| Memory | 4GB 64-bit LPDDR5, 34 GB/s | 8GB 128-bit LPDDR5, 68 GB/s |
| Storage | – (supports external NVMe) | – (supports external NVMe) |
| Video Encode | 1080p30 supported by 1-2 CPU cores | 1080p30 supported by 1-2 CPU cores |
| Video Decode | 1x 4K60 / 2x 4K30 / 5x 1080p60 / 11x 1080p30 (H.265) | 1x 4K60 / 2x 4K30 / 5x 1080p60 / 11x 1080p30 (H.265) |
| Camera | Up to 4 cameras (8 through virtual channels*), 8 lanes MIPI CSI-2 D-PHY 2.1 (up to 20 Gbps) | Up to 4 cameras (8 through virtual channels*), 8 lanes MIPI CSI-2 D-PHY 2.1 (up to 20 Gbps) |
| PCIe | 1 x4 + 3 x1 (PCIe Gen3, Root Port & Endpoint) | 1 x4 + 3 x1 (PCIe Gen3, Root Port & Endpoint) |
| USB | 3x USB 3.2 Gen2 (10 Gbps), 3x USB 2.0 | 3x USB 3.2 Gen2 (10 Gbps), 3x USB 2.0 |
| Networking | 1x GbE | 1x GbE |
| Display | 1x 4K30 multimode DisplayPort 1.2 (+MST)/eDP 1.4/HDMI 1.4* | 1x 4K30 multimode DisplayPort 1.2 (+MST)/eDP 1.4/HDMI 1.4* |
| Other I/O | 3x UART, 2x SPI, 2x I2S, 4x I2C, 1x CAN, DMIC and DSPK, PWM, GPIOs | 3x UART, 2x SPI, 2x I2S, 4x I2C, 1x CAN, DMIC and DSPK, PWM, GPIOs |
| Power | 5W – 10W | 7W – 15W |
| Mechanical | 69.6 mm x 45 mm, 260-pin SO-DIMM connector | 69.6 mm x 45 mm, 260-pin SO-DIMM connector |
| Price | $199† | $299† |

* For more information about additional compatibility with DisplayPort 1.4a and HDMI 2.1, and about virtual channels, see the Jetson Orin Nano series data sheet.

† Pricing at 1KU volume.
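The memory bandwidth figures in Table 1 follow from bus width and data rate: peak bandwidth is the number of bytes moved per transfer (bus width divided by 8) multiplied by transfers per second. The sketch below assumes an LPDDR5 data rate of 4266 MT/s. That value is not stated in this article; it was chosen because it reproduces the 34 GB/s and 68 GB/s figures, so check the data sheet for the actual rate. `peak_bandwidth_gbps` is a hypothetical helper.

```python
def peak_bandwidth_gbps(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 1e9 bytes).

    bytes per transfer = bus width / 8; multiply by transfers per second.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mts * 1e6 / 1e9


# Assumed LPDDR5 data rate (MT/s); not given in the table above.
LPDDR5_MTS = 4266

for name, width in [("Orin Nano 4GB", 64), ("Orin Nano 8GB", 128)]:
    print(f"{name}: {peak_bandwidth_gbps(width, LPDDR5_MTS):.1f} GB/s")
```

Under this assumption it prints 34.1 GB/s and 68.3 GB/s. Because both modules are modeled at the same data rate, doubling the bus from 64 to 128 bits doubles peak bandwidth.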

For more information about supported features, see the Software Features section of the latest NVIDIA Jetson Linux Developer Guide.

Performance Benchmarks with Jetson Orin Nano

With Jetson AGX Orin, NVIDIA is leading the inference performance category of MLPerf. Jetson Orin modules provide a giant leap forward for your next-generation applications, and now the same NVIDIA Orin architecture is made accessible for entry-level AI devices.

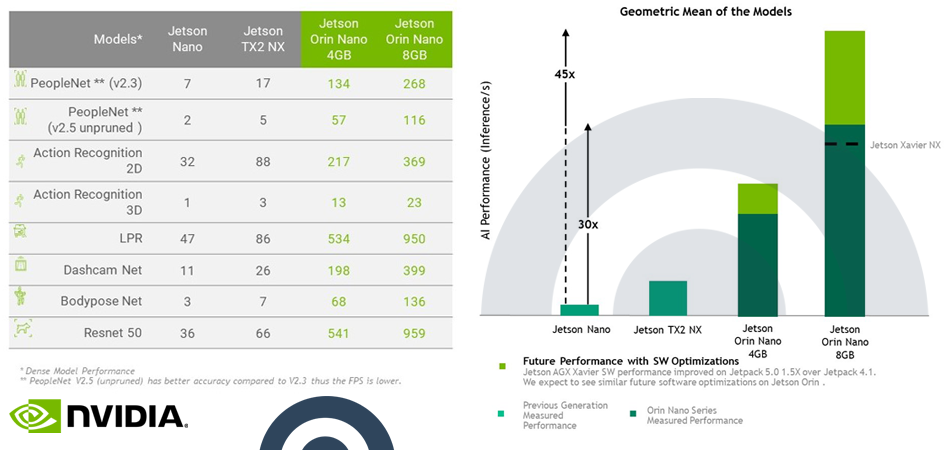

NVIDIA ran computer vision benchmarks on Jetson Orin Nano using emulation mode with NVIDIA JetPack 5.0.2, and the results show how it sets a new standard. Testing included several of NVIDIA's dense INT8 and FP16 pretrained models from NGC, as well as a standard ResNet-50 model. The same models were also run on Jetson Nano, Jetson TX2 NX and Jetson Xavier NX for comparison.

Here is the full list of benchmarks:

- NVIDIA PeopleNet v2.3 (pruned) for people detection, and NVIDIA PeopleNet v2.5 for highest-accuracy people detection

- NVIDIA ActionRecognitionNet 2D and 3D models

- NVIDIA LPRNet for license plate recognition

- NVIDIA DashCamNet

- NVIDIA BodyPoseNet for multiperson human pose estimation

- ResNet-50 (224×224)

Taking the geometric mean of these benchmarks, Jetson Orin Nano 8GB shows a 30x performance increase over Jetson Nano. With future software improvements, NVIDIA expects this to approach 45x. Other Jetson devices have gained 1.5x in performance since their first supporting software release, and NVIDIA expects the same for Jetson Orin Nano.
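A geometric mean is the usual way to summarize speedups across benchmarks, because a single outlier ratio cannot dominate it the way it can dominate an arithmetic mean. The sketch below computes it in log space. The speedup list is made-up illustrative input, not NVIDIA's measurements, and the last lines only reproduce the text's projection of 30x multiplied by an expected 1.5x software gain. `geomean` is a hypothetical helper; Python 3.8+ also provides `statistics.geometric_mean`.

```python
import math
from typing import Sequence


def geomean(values: Sequence[float]) -> float:
    """Geometric mean: the n-th root of the product, computed via logs
    to avoid overflow when multiplying many ratios."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Illustrative per-benchmark speedups only (NOT NVIDIA's data).
example_speedups = [2.0, 8.0]
print(geomean(example_speedups))  # ~4.0, versus an arithmetic mean of 5.0

# Projection from the text: measured 30x, times an expected 1.5x software gain.
measured = 30.0
projected = measured * 1.5  # 45.0
print(projected)
```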